Web app backends have many standard responsibilities: user account management, sessions, billing, and others. These are all important, but handling them in a 3D web app is no different from handling them in any other web app. So, in keeping with the theme of this series, we'll leave these topics out of our discussion of the backend and focus on the responsibilities that arise specifically when 3D data is involved.

Backend roles

As we discussed in part 2, the client-server architecture makes the server the central authority of the app: it manages storage and runs the data processing that carries business value or is computationally intensive. For apps handling 3D data there are some unique responsibilities, summarized below. Your 3D web application doesn't need to take on all of them; the list is meant to give a sense of the range of tasks a server can be assigned.

Model storage

In the simplest case, there is a catalog of specially prepared 3D models whose sole purpose is to be displayed to the users, for example in a product showcase, a configurator, or a virtual tour. This kind of database can be highly homogeneous: all models are in the same format, optimized for fast transfer and display on the client.

When the users supply their own models, storage differs in that the models come in different formats and are of varying quality and complexity. Depending on the scenario, it might make sense to normalize such incoming data by converting it to a common format. For example, an online CAD tool would convert the imported data to its own internal representation for further modeling. Client-side visualization also benefits from converting data to a single format (e.g. glTF for Three.js or CDXFB for CAD Exchanger Web Toolkit). By contrast, an online PLM system would need to store and operate on the original files.

Conversion of user-supplied data

3D data comes in a variety of formats targeted at various use cases, and it can be quite limiting to force your users to supply models in specific formats only. Conversion is therefore necessary if you've decided to store the data in a common format, or if you have to output models in another format for downstream applications (e.g. supplying cleaned-up user-provided CAD designs to manufacturing shops in a custom part manufacturing application).
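One way to organize this on the server is a small registry that picks a converter by file extension and passes through files that are already in the common format. The sketch below is only an illustration: `ConversionRegistry`, the `Converter` signature and the choice of `.glb` as the target are assumptions made for the example, and the actual conversion would be done by whatever tool you pick.

```python
from pathlib import PurePath
from typing import Callable, Dict

# Hypothetical converter signature: raw file bytes in, common-format bytes out.
Converter = Callable[[bytes], bytes]


class UnsupportedFormat(ValueError):
    """Raised when no converter is registered for a file extension."""


class ConversionRegistry:
    def __init__(self, target_extension: str) -> None:
        self.target_extension = target_extension.lower()
        self._converters: Dict[str, Converter] = {}

    def register(self, extension: str, converter: Converter) -> None:
        # Extensions are matched case-insensitively (".STEP" == ".step").
        self._converters[extension.lower()] = converter

    def normalize(self, filename: str, data: bytes) -> bytes:
        extension = PurePath(filename).suffix.lower()
        if extension == self.target_extension:
            return data  # already in the common format, store as-is
        try:
            converter = self._converters[extension]
        except KeyError:
            raise UnsupportedFormat(f"no converter for {extension!r}") from None
        return converter(data)
```

Rejecting unknown extensions explicitly, rather than storing them silently, keeps the stored catalog homogeneous.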

Extraction of information

This is a broad category of tasks including things like collection of a bill of materials (BOM) from the model's hierarchical structure, measuring the models' properties (volumes, surface area, etc.), extraction of metadata for use in domain-specific workflows (e.g. BIM project management), inspection of geometry for low-level purposes (e.g. model quality analysis and object identification). Extensive model inspection capabilities also come in handy when it's necessary to convert the model to a custom format for further processing.
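As an illustration of the first item, here is a minimal sketch of collecting a BOM from a model's hierarchy. The `Node` class is a made-up stand-in for whatever assembly tree your 3D toolkit exposes; the sketch assumes that leaf nodes are parts, that nodes with children are assemblies, and that a part reused in several places appears as the same node referenced several times.

```python
from collections import Counter
from dataclasses import dataclass, field
from typing import List


@dataclass
class Node:
    """Hypothetical assembly-tree node: a part if it has no children."""
    name: str
    children: List["Node"] = field(default_factory=list)


def collect_bom(root: Node) -> Counter:
    """Count how many times each part occurs anywhere under the root."""
    bom: Counter = Counter()
    stack = [root]
    while stack:  # iterative traversal, so deep hierarchies are not a problem
        node = stack.pop()
        if node.children:
            stack.extend(node.children)
        else:
            bom[node.name] += 1
    return bom


bolt = Node("bolt")
wheel = Node("wheel", [Node("rim"), Node("tire"), bolt, bolt, bolt, bolt])
car = Node("car", [Node("chassis"), wheel, wheel, wheel, wheel])
bom = collect_bom(car)  # 1 chassis, 4 rims, 4 tires, 16 bolts
```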

Screenshot and thumbnail generation

The most common use of this type of processing is to generate preview images to use in web pages instead of the 3D model viewer itself. Another, vastly different use case is generation of renders of a 3D model.

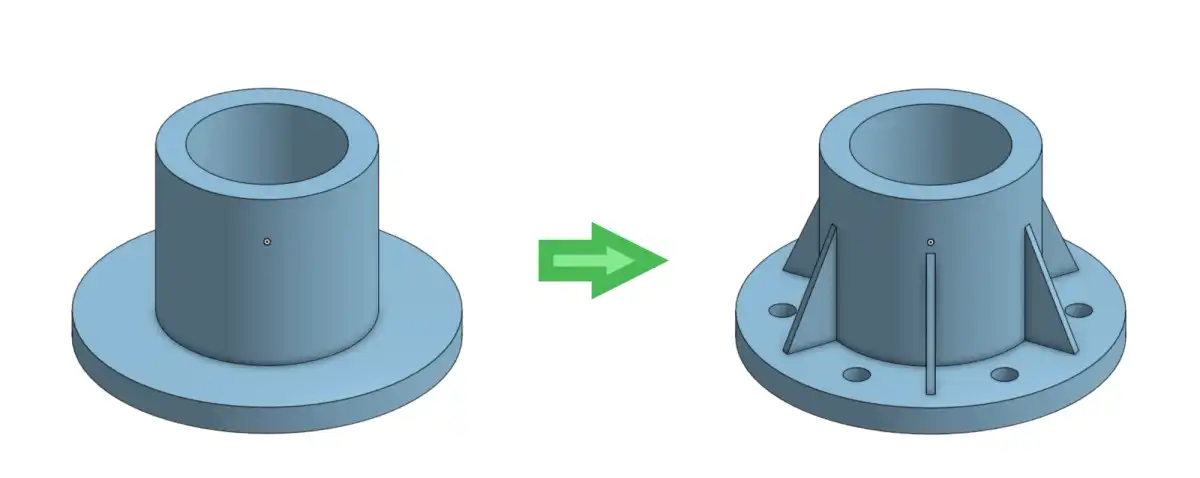

Meshing

Meshing is mostly relevant for CAD models, whose exact geometry has to be converted to a triangle mesh before it can be displayed. Meshes are also useful for many types of algorithmic analysis, such as collision detection and engineering simulations.
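To make the idea concrete, here is a toy sketch of meshing: an exact surface (a sphere, standing in for a CAD surface) is sampled on a regular parameter grid and turned into triangles. Real CAD meshers are far more sophisticated (adaptive and tolerance-driven), so treat the function names and the uniform grid as assumptions made purely for illustration.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float, float]
Triangle = Tuple[Point, Point, Point]


def sphere_point(radius: float, u: float, v: float) -> Point:
    """Point on the sphere for longitude u in [0, 2*pi] and polar angle v in [0, pi]."""
    return (radius * math.sin(v) * math.cos(u),
            radius * math.sin(v) * math.sin(u),
            radius * math.cos(v))


def tessellate_sphere(radius: float, segments: int) -> List[Triangle]:
    """Split the sphere into `segments` latitude bands and 2*segments longitude slices."""
    if segments < 2:
        raise ValueError("segments must be at least 2")
    triangles: List[Triangle] = []
    for i in range(segments):
        v0, v1 = math.pi * i / segments, math.pi * (i + 1) / segments
        for j in range(2 * segments):
            u0, u1 = math.pi * j / segments, math.pi * (j + 1) / segments
            a, b = sphere_point(radius, u0, v0), sphere_point(radius, u1, v0)
            c, d = sphere_point(radius, u1, v1), sphere_point(radius, u0, v1)
            if i > 0:             # at the top pole a and b coincide, skip that triangle
                triangles.append((a, b, c))
            if i < segments - 1:  # at the bottom pole c and d coincide
                triangles.append((a, c, d))
    return triangles


def triangle_area(triangle: Triangle) -> float:
    """Half the length of the cross product of two edge vectors."""
    (ax, ay, az), (bx, by, bz), (cx, cy, cz) = triangle
    ux, uy, uz = bx - ax, by - ay, bz - az
    vx, vy, vz = cx - ax, cy - ay, cz - az
    nx, ny, nz = uy * vz - uz * vy, uz * vx - ux * vz, ux * vy - uy * vx
    return 0.5 * math.sqrt(nx * nx + ny * ny + nz * nz)


def mesh_area(triangles: List[Triangle]) -> float:
    return sum(triangle_area(t) for t in triangles)
```

Comparing `mesh_area(tessellate_sphere(1.0, n))` with the exact value `4 * math.pi` for growing `n` shows the usual trade-off: a finer mesh approximates the surface better but contains more triangles to store, transfer and render.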

Sophisticated model analysis and processing

This is another broad category where I include things like detection of geometric problems and analysis of manufacturability. It requires access to the entirety of the model's contents, but obviously also sophisticated algorithms on top of that to draw the necessary conclusions from the model. Procedures like model simplification and geometry cleanup also fall in this category. They modify the models' contents, but in a directed and controlled fashion.

General model editing and model production

For more general cases, where the users are offered greater freedom (e.g. in a 3D editor), it is necessary to support various modeling operations that deeply modify the geometry, structure and metadata of the 3D model.

Just as the range of things you can do with 3D data on your backend is varied, the range of tools to perform these tasks is huge. It includes commercial products and open-source software, established and up-and-coming libraries, highly specific tools and all-encompassing toolkits. To navigate this landscape it makes sense to:

- break up the app into responsibilities according to the classification above, or in any other sensible manner, then look for tools taking care of required tasks;

- obtain a representative collection of 3D data as early in development as possible and test your candidate tools against it.
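The second point can be as simple as a script that runs every candidate over the collection and records what failed. Below is a minimal sketch; it assumes each candidate is wrapped in a Python callable that takes a file path and raises on failure, which is an assumption of this example rather than a property of any particular tool.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Sequence

# Hypothetical wrapper signature: process the file, raise an exception on failure.
Tool = Callable[[str], None]


@dataclass
class ToolReport:
    passed: int = 0
    failures: List[str] = field(default_factory=list)

    @property
    def success_rate(self) -> float:
        total = self.passed + len(self.failures)
        return self.passed / total if total else 0.0


def evaluate_tools(tools: Dict[str, Tool], corpus: Sequence[str]) -> Dict[str, ToolReport]:
    """Run every tool over every file and record which files it could not handle."""
    reports: Dict[str, ToolReport] = {}
    for name, tool in tools.items():
        report = ToolReport()
        for path in corpus:
            try:
                tool(path)
            except Exception:
                report.failures.append(path)
            else:
                report.passed += 1
        reports[name] = report
    return reports
```

Keeping the list of failing files, not just the success rate, makes it easy to see whether a tool stumbles on one format or on models of a certain complexity.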

APIs or libraries?

The tools to perform the aforementioned tasks may come in the form of libraries (a term used below for both true libraries and any locally run software packages) or as a cloud service with an API. The choice between an API and a library deserves to be taken seriously and should be based on the needs of your application. Here are the factors you might want to consider:

- Ease of integration. APIs are generally easier to integrate into your application because they are programming language-agnostic. Libraries are usually provided for a limited set of languages, and if your backend is not written in one of them, wrapping will be necessary.

- Impact on app architecture. When using an API, no additional requirements are placed on the execution environment and the app structure. Integration of a library has to take into account the app architecture and maybe even modify it (e.g. if separate services need to be created for the processing in question). If the library's list of supported platforms is not very extensive, the choice of execution platform becomes very important too.

- Performance and network overhead. Using APIs incurs the overhead of contacting another service over the internet. It also requires you to trust that the third-party provider's service is going to be available and will perform the operation quickly. A locally deployed library does not guarantee swift execution, but at least more performance parameters are under the control of the developer and can be influenced by architectural choices, like allocating separate and more powerful hardware for performance-critical workflows.

- Data security. When using the APIs you invariably share the 3D data with a third party, which normally doesn't happen with libraries. Depending on the profile of your users and their expectations of data confidentiality, APIs then might not be an option at all.

- Licensing. APIs are almost always commercial products, since maintaining one incurs operational expenses. Libraries, on the other hand, include free-to-use options for 3D data workflows. Even when a library is not free to use, licensing can still differ between the two options. APIs normally employ volume-based licensing, whereas libraries and software packages can use a variety of licensing methods, some of which can turn out more economical (e.g. when piping massive amounts of data through conversion).
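The licensing point comes down to simple arithmetic. The sketch below compares a per-call API price with a flat library fee; all prices are made up for illustration, and the comparison deliberately ignores the cost of hosting and operating the library yourself.

```python
def break_even_calls(flat_fee_cents: int, price_per_call_cents: int) -> int:
    """Smallest number of calls at which per-call pricing costs at least the flat fee.

    Amounts are integer cents to avoid floating-point rounding surprises.
    """
    if flat_fee_cents < 0 or price_per_call_cents <= 0:
        raise ValueError("fee must be non-negative and per-call price positive")
    # Ceiling division on integers.
    return -(-flat_fee_cents // price_per_call_cents)


# Hypothetical numbers: a $5,000/year library licence vs. $0.10 per API conversion.
calls = break_even_calls(flat_fee_cents=500_000, price_per_call_cents=10)
```

With these invented numbers the two options cost the same at 50,000 conversions per year; below that the API is cheaper, above it the flat fee is.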

A common scenario is to choose APIs for early-stage projects: MVPs, proofs of concept, etc. This makes sense because at these stages in the project's life velocity matters the most, so flexibility and ease of use are paramount, while performance, reliability and security concerns temporarily take a back seat. The volume-based licensing models of APIs also suit the modest loads that are typical at this stage. As the project moves away from an MVP toward a fleshed-out product, priorities change and throughput, reliability and security become more important, which can tip the scales towards local processing.
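If you expect to make this move later, it helps to hide the processing behind a narrow interface from the start, so that swapping an API for a library does not ripple through the app. A minimal sketch follows; every name in it (`ModelConverter`, `ApiConverter`, `LibraryConverter`, the URL scheme) is hypothetical, and the actual HTTP client or library binding is injected as a callable rather than shown.

```python
from typing import Callable, Protocol


class ModelConverter(Protocol):
    """The only thing the rest of the backend knows about conversion."""

    def convert(self, data: bytes, target_format: str) -> bytes: ...


class ApiConverter:
    """Delegates to a hosted conversion service through an injected transport."""

    def __init__(self, post: Callable[[str, bytes], bytes], base_url: str) -> None:
        self._post = post          # hypothetical HTTP helper: (url, body) -> response body
        self._base_url = base_url  # hypothetical service address

    def convert(self, data: bytes, target_format: str) -> bytes:
        return self._post(f"{self._base_url}/convert?to={target_format}", data)


class LibraryConverter:
    """Delegates to a locally installed library through an injected callable."""

    def __init__(self, run: Callable[[bytes, str], bytes]) -> None:
        self._run = run  # hypothetical binding to a native conversion library

    def convert(self, data: bytes, target_format: str) -> bytes:
        return self._run(data, target_format)


def handle_upload(converter: ModelConverter, data: bytes) -> bytes:
    """Application code: unaware of which implementation it was given."""
    return converter.convert(data, "glb")
```

The MVP would construct an `ApiConverter`; the later product can pass a `LibraryConverter` to the same `handle_upload` without touching it.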

Backend execution environments

There are multiple choices as to where to run the server portion of the application. This question is less specific to 3D web apps and is more of a general consideration when building any web app. Let's look at the possible options:

- Physical dedicated servers require a lot of administration overhead, but provide ultimate control.

- Virtual machines allow you (or the physical server provider) finer control over computational resources. They also make it possible to scale the number of logical servers in use by spinning them up and shutting them down based on usage.

- Containers take the idea of the virtual machine further; being more lightweight they start up and shut down faster, simplifying elastic scaling.

- Cloud providers (AWS, Azure, Google Cloud) take away the necessity to manage your own hardware and provide the environment to run the same virtual machines and containers and manage scaling policies.

- Serverless computing (AWS Lambda, Azure Functions and Google Cloud Functions) allows the developer to only provide the code to run, and completely manages the environment.

Two things stand out in relation to 3D data in this landscape: data security and tool compatibility. Managing the infrastructure yourself ensures that your users' 3D data doesn't leave the perimeter of your business. If you instead trust a cloud provider with that data, you normally get reasonable guarantees regarding security at the infrastructure level. This isn't to say that your app is automatically bulletproof if it runs in Azure, just that the range of possible security issues is narrowed down. On the other hand, the data is formally stored on a third party's hardware, which might ultimately pose a problem for sensitive clients (e.g. in the military sector).

The other point concerns your backend code and the tools it uses. 3D data processing, conversion and analysis tools are often written in native languages, such as C and C++, and are somewhat picky about the environment they run in. Anything that provides OS-level capabilities, such as VMs and containers, is fine for this code. However, serverless computing services are usually incompatible with such software, because they target managed languages with standard package management tooling. This can be a bummer, because many 3D data processing tasks (e.g. data conversion, simplification) fit very nicely into the fundamentally stateless paradigm of serverless computing.
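The stateless shape of such a task is easy to see in code. The sketch below wraps a native command-line converter in a function that takes bytes and returns bytes, leaving nothing behind between calls. It assumes a tool invoked as `<command> <input path> <output path>`; that calling convention and the function name are assumptions of the example, not the interface of any specific product.

```python
import subprocess
import tempfile
from pathlib import Path
from typing import Sequence


def convert_stateless(data: bytes, command: Sequence[str],
                      input_name: str, output_name: str,
                      timeout: float = 60.0) -> bytes:
    """Run a native converter on `data` and return the result.

    All intermediate files live in a temporary directory that is removed
    when the function returns, so no state survives between calls.
    """
    with tempfile.TemporaryDirectory() as workdir:
        source = Path(workdir) / input_name
        target = Path(workdir) / output_name
        source.write_bytes(data)
        # check=True raises CalledProcessError if the tool exits with an error;
        # the timeout guards against a converter hanging on a malformed model.
        subprocess.run([*command, str(source), str(target)],
                       check=True, timeout=timeout)
        return target.read_bytes()
```

Because the function depends only on its arguments, any number of copies can run in parallel in VMs or containers where the native tool is installed.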

Summary

Unlike the frontend, where the necessity to work with 3D data carries straightforward implications (3D view, WebGL rendering, one of a few common visualization libraries), on the backend the possibilities are immensely varied and the choices should be dictated by the app's required functionality. Because of that, it's hard to provide specific advice to a general audience. So instead, in this post we touched upon a few important considerations and described the options, which hopefully makes the landscape a bit easier to navigate.

In the next post in this series we'll step back from the client-server architecture and take a look at tools that take care of complete end-to-end web app creation.
