
Over the course of the previous parts, we've explored the various considerations that arise when building web applications that operate specifically on 3D data. The key observation throughout the series is that an app's purpose defines its structure and the tools best suited to it. This perspective can feel a bit abstract and removed from practice, so in this post we'll walk through building a specific app and see how its context shapes its structure and the choice of tools.

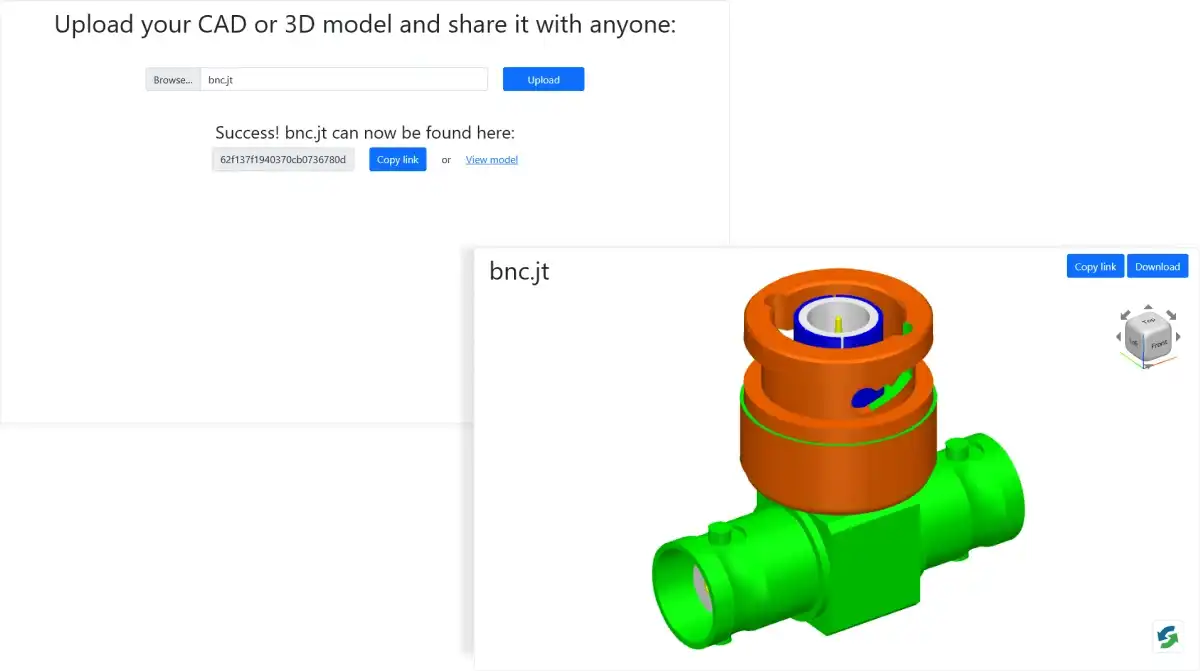

CAD model sharing app

To keep things simple, we'll build a service for sharing CAD and 3D models of various formats; we'll call it ShareYourCAD. A user selects and uploads a model, which is assigned an identifier and stored on the server. The user gets a unique URL for the model, which they can copy and share with a third party by email, through a chat app, or in some other way. When the recipient visits this URL, they see the shared model in a 3D viewer and can get a quick overview of what was sent to them before downloading the original model.

App structure, tech stack and user workflows

Here's a quick summary of the tech stack for the impatient:

- ASP.NET

- MongoDB

- vanilla JavaScript and Bootstrap

Let's get into the details and the reasoning behind them. This application requires centralized storage to enable sharing data between users, so a non-trivial server is required. Visualization is not a key capability of this application but rather a nice addition, and it won't place any special demands on the architecture, which is why we can settle on the basic client-server model with client-side rendering. The server will, however, process the user-provided models and optimize them for web visualization.

Storage

In order to keep track of shared models, the backend will require a database. Our data model will consist of a single collection of objects with the following shape:

```
class Share
{
    string Id
    ShareStatus Status
    string FileName
    DateTime CreatedAt
}
```

Apart from a periodic cleanup of old shares, we will only store and fetch shares by their ID, so we can get away with a straightforward NoSQL database; we'll use MongoDB. A relational database could also handle this use case, but it requires a bit more setup while providing little functional benefit for our scenario.
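To make that access pattern concrete, here is a minimal in-memory stand-in for the shares collection, written in JavaScript. It is only an illustration under our own assumptions: the class InMemoryShareStore and its methods are hypothetical, and the sequential IDs replace the ObjectIds MongoDB would generate. Apart from the expiry sweep, everything the app does is an insert, a lookup or a replace keyed by ID, which is essentially a key-value interface.

```javascript
// Hypothetical in-memory stand-in for the shares collection (illustration only).
// Every operation is keyed by the share ID, which is why a simple document store suffices.
class InMemoryShareStore {
  constructor() {
    this.shares = new Map(); // id -> share
    this.nextId = 1;         // MongoDB would generate an ObjectId instead
  }

  // Insert a new share and assign it an ID (like CreateShare).
  create(share) {
    const stored = { ...share, id: String(this.nextId++) };
    this.shares.set(stored.id, stored);
    return { ...stored };
  }

  // Fetch by ID; null when the share does not exist (like GetShare).
  get(id) {
    const share = this.shares.get(id);
    return share === undefined ? null : { ...share };
  }

  // Replace the whole stored object by ID (like UpdateShare); false if unknown.
  update(share) {
    if (!this.shares.has(share.id)) {
      return false;
    }
    this.shares.set(share.id, { ...share });
    return true;
  }
}

const store = new InMemoryShareStore();
const created = store.create({ fileName: "bracket.step", status: "Submitted" });
store.update({ ...created, status: "Ready" });
console.log(store.get(created.id).status); // → Ready
console.log(store.get("missing"));         // → null
```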

Language, framework and libraries

Normally, the choice of the tech stack is driven by organizational concerns (workforce availability, code reuse) or technical requirements (performance, available libraries). For the purposes of our example, these things don't matter, so we will work from the requirement of 3D data processing and visualization. We will be a bit opinionated here and use CAD Exchanger SDK for data preparation and visualization and let that drive other stack decisions. Since the SDK has a C# API, for convenience we'll pick ASP.NET as our backend framework. CAD Exchanger APIs can also be used directly in Spring and Django apps (in Java and Python respectively), but will have to be wrapped in a CLI utility to work in other major backend languages - JavaScript, TypeScript, Go, PHP, etc.

The UI on the client will be quite basic and require minimal interactivity, so we'll get away with vanilla JavaScript and Bootstrap.

Workflows

Our app should enable two workflows - Uploader and Recipient.

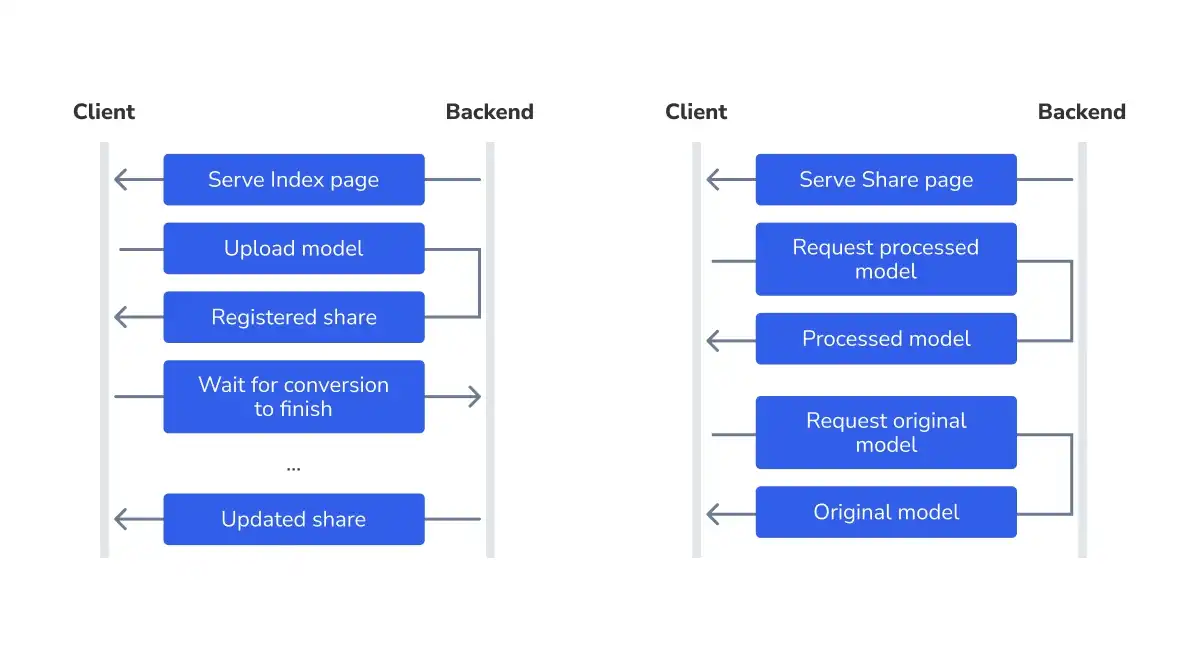

Uploader workflow

The uploader visits the Index page, selects a model and submits it. After submission, we keep the user on the page and provide a link to the shared model once it's processed. To avoid a page reload, we'll use the Fetch API to submit the form with the model file. The backend will respond after some basic initial processing of the model and start the conversion to a visualization format in the background, which might take a while if the model is large. The client will then make another request to be notified when processing finishes, and update the UI with the unique link to the shared model.

Recipient workflow

The recipient accesses the shared model via the link the uploader got once the conversion finished. The recipient's workflow is far simpler: they open the share page, where they can do three things - view the model, copy its URL and download the original model. Both viewing and downloading involve requesting data from the server.

Setup

To start, we'll make sure the .NET SDK and MongoDB are installed and create an ASP.NET Core project from the MVC template. We'll also install the MongoDB driver package to access the database from our code, and the FluentScheduler package for a reason we'll explain later.

```shell
> dotnet new mvc -o ShareYourCAD
> cd ShareYourCAD
> dotnet add package MongoDB.Driver
> dotnet add package FluentScheduler
```

To get started on the Uploader workflow, we'll first create the HTML markup for the index page, where the model upload is performed. The project template already contains an index page at Views/Home/Index.cshtml. We'll use ASP.NET Core's Razor template syntax and a pinch of Bootstrap for easy styling. The index page needs a simple form with a file upload input, so we replace the contents of the template's index page with the following markup:

```cshtml
@{
    ViewData["Title"] = "Home Page";
}

@section scripts{
    <script src="~/js/index.js"></script>
}

<h1 class="text-center mb-5">Upload your CAD or 3D model and share it with anyone:</h1>

<form id="upload-form" class="text-center">
    <div class="row justify-content-md-center">
        <div class="col col-md-6">
            <input id="model-file-input" type="file" name="modelFile" class="form-control">
        </div>
        <div class="col-auto">
            <input id="upload-button" type="submit" value="Upload" class="btn btn-primary upload-btn">
        </div>
    </div>
</form>
```

Next, we create a new JavaScript source file index.js in the wwwroot/js subfolder and write a form submission event handler that uses the Fetch API.

```javascript
let uploadForm = document.getElementById('upload-form');

uploadForm.addEventListener('submit', async (event) => {
    event.preventDefault();

    let modelFileInput = document.getElementById('model-file-input');
    if (modelFileInput.value === '') {
        return;
    }

    let formData = new FormData(event.target);
    let share = await fetch('/Share', {
        method: 'post',
        body: formData
    }).then(res => res.json());
});
```

To see where the URL used in this handler comes from, let's turn our attention to the backend.

Backend structure

Our backend will contain these routes for resources we want to provide:

- Pages
    - /Home/Index - start page with the model upload form;
    - /Home/Share/{id} - shared model's page, indexed with the share ID.
- API endpoints for client-side JavaScript code
    - /Share - for uploading new models;
    - /Share/{id}/Wait - for requesting a notification on the model processing status;
    - /Share/{id}/Model - for retrieving the processed model for viewing;
    - /Share/{id}/Original - for downloading the original model file.
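The route list can be read as a small dispatch table. The JavaScript sketch below is purely illustrative, since ASP.NET Core's router is far more general and is configured in C# as described below: the ROUTES table and the routeFor() helper are hypothetical, the HTTP methods and action names are taken from the controller code later in this post, and the /Share/{id}/Model route is written with the {component} segment the backend actually uses.

```javascript
// Hypothetical dispatch table mirroring ShareYourCAD's routes (illustration only).
// Matching is case-insensitive, like ASP.NET Core routing.
const ROUTES = [
  { method: "GET",  pattern: /^\/(?:Home(?:\/Index)?)?\/?$/i,       controller: "Home",  action: "Index" },
  { method: "GET",  pattern: /^\/Home\/Share\/([^/]+)$/i,           controller: "Home",  action: "Share" },
  { method: "POST", pattern: /^\/Share$/i,                          controller: "Share", action: "PostShare" },
  { method: "GET",  pattern: /^\/Share\/([^/]+)\/Wait$/i,           controller: "Share", action: "WaitForShare" },
  { method: "GET",  pattern: /^\/Share\/([^/]+)\/Model\/([^/]+)$/i, controller: "Share", action: "GetProcessedModelComponent" },
  { method: "GET",  pattern: /^\/Share\/([^/]+)\/Original$/i,       controller: "Share", action: "GetOriginalModel" },
];

// Returns { controller, action, id, component } for a request, or null if nothing matches.
function routeFor(method, path) {
  for (const route of ROUTES) {
    if (route.method !== method) {
      continue;
    }
    const match = route.pattern.exec(path);
    if (match) {
      const [, id, component] = match; // undefined when the route has no such segment
      return { controller: route.controller, action: route.action, id, component };
    }
  }
  return null;
}

const r = routeFor("GET", "/Share/abc123/Model/scenegraph.cdxfb");
console.log(r.action, r.id, r.component); // → GetProcessedModelComponent abc123 scenegraph.cdxfb
```

The table also makes the split between the two controllers visible: every page route starts with /Home, and every API route starts with /Share.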

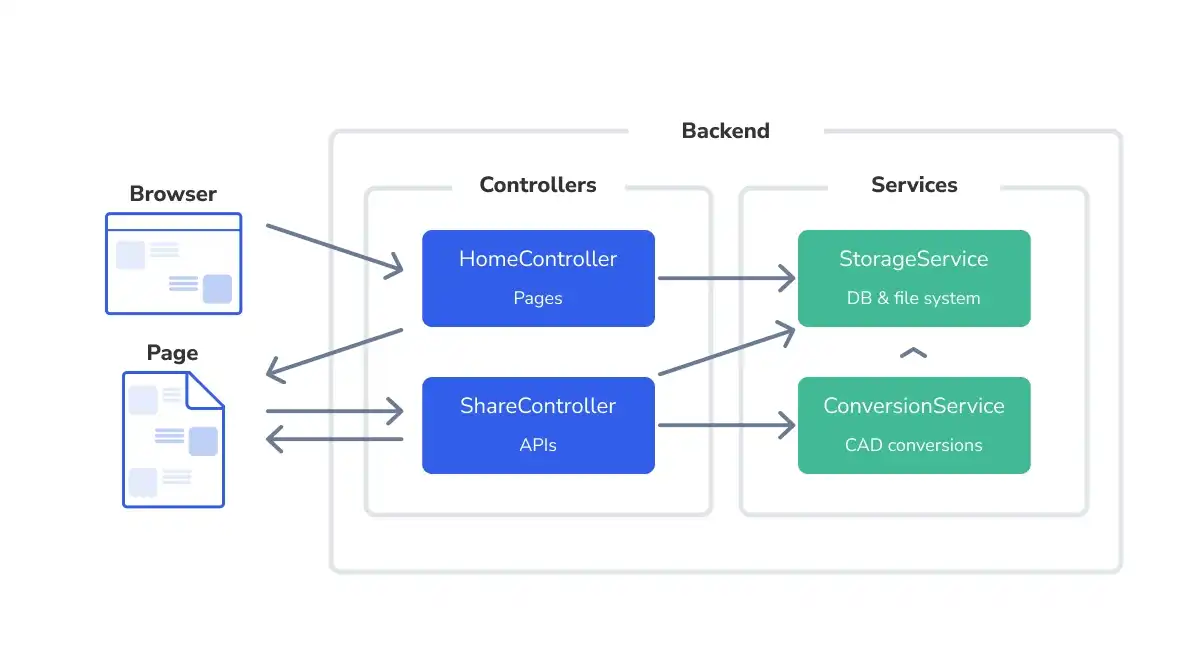

In ASP.NET Core, like in many MVC frameworks, the routes are defined by controllers stored in the Controllers subfolder of the project. It's also customary to separate concerns into different controllers, so we'll have two controllers - HomeController and ShareController, one for each prefix.

In the main app file Program.cs, the conventional route pattern must include the optional ID parameter for the /Home/Share/{id} route (the pattern generated by the MVC template already does). The routes of ShareController are set explicitly, so the pattern does not need to cover them.

```csharp
app.MapControllerRoute(
    name: "default",
    pattern: "{controller=Home}/{action=Index}/{id?}");
```

Setting up the model portion of our app, we implement the following class in the Models/Share.cs file. A Share can have one of a few statuses, indicating the steps of its lifecycle. Of special interest is the Expired status: a real app should purge outdated shares once in a while to keep storage requirements under control. We'll do that too, by deleting the files associated with a share. We won't delete the share record itself, though; we'll only flag it as expired to distinguish a share that never existed from one that was deleted because of its age. Note also that the Share class doesn't contain the model files themselves: to avoid bloating the database, we'll store the files on the file system.

```csharp
using MongoDB.Bson;
using MongoDB.Bson.Serialization.Attributes;

namespace ShareYourCAD.Models;

public enum ShareStatus {
    Submitted,
    InProcessing,
    Error,
    Ready,
    Expired
}

public class Share
{
    // Generated by the MongoDB driver on insert; stored as an ObjectId
    [BsonId]
    [BsonRepresentation(BsonType.ObjectId)]
    public string? Id { get; set; }

    public ShareStatus Status { get; set; }

    public string FileName { get; set; }

    public DateTime CreatedAt { get; set; }

    public Share(string fileName)
    {
        Status = ShareStatus.Submitted;
        FileName = fileName;
        CreatedAt = DateTime.Now;
    }
}
```

We will also need some services to separate concerns on the backend rather than keep all the logic in the controller (a notorious antipattern). The business logic that encodes the lifecycle of a shared model is minimal, so we'll bend the rules a bit and implement it in the controller. However, the implementation details will be encapsulated in two services:

- StorageService - interfaces with the database and with the model file storage on the file system;
- ConversionService - performs CAD model processing using CAD Exchanger SDK.

The structure of our backend and relationships between components are illustrated by this diagram:

Accepting shared model on the backend

We'll start by defining ShareController with a POST request handler for the /Share route. The controller receives a logger and a StorageService through dependency injection, the mechanism by which ASP.NET's internal machinery implicitly supplies services to the code that needs them.

```csharp
using Microsoft.AspNetCore.Mvc;
using ShareYourCAD.Models;
using ShareYourCAD.Services;

namespace ShareYourCAD.Controllers;

[Route("[controller]")]
[ApiController]
public class ShareController : Controller
{
    private readonly ILogger<ShareController> _logger;
    private StorageService _storageService;

    public ShareController(ILogger<ShareController> logger,
        StorageService storageService)
    {
        _logger = logger;
        _storageService = storageService;
    }

    [HttpPost("")]
    public async Task<IActionResult> PostShare(IFormFile modelFile)
    {
        // ...
    }
}
```

In the controller action PostShare we want to enable the first steps of a share lifecycle:

- create a share object;

- deposit it to the database;

- save the uploaded model to the file system;

- launch the conversion in the background;

- return the share object in its current state to the client to tell it that the upload succeeded.
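The lifecycle steps listed above are the first transitions of a share's status. As an illustration, the ShareStatus lifecycle implied by this post can be written down as a transition table. The JavaScript sketch below is hypothetical: the C# code in this post does not enforce transitions explicitly, and TRANSITIONS, canTransition() and withStatus() are names of our own. The table is inferred from the code: processing moves a share from Submitted to InProcessing and then to Ready or Error, and the cleanup job described later marks any share that isn't expired yet as Expired.

```javascript
// Hypothetical ShareStatus transition table inferred from the workflow in this post.
const TRANSITIONS = {
  Submitted:    ["InProcessing", "Expired"],
  InProcessing: ["Ready", "Error", "Expired"],
  Ready:        ["Expired"],
  Error:        ["Expired"],
  Expired:      [], // terminal: the record stays, the files are gone
};

function canTransition(from, to) {
  const allowed = TRANSITIONS[from];
  return Array.isArray(allowed) && allowed.includes(to);
}

// Returns a copy of the share with the new status, or throws on an illegal change.
function withStatus(share, to) {
  if (!canTransition(share.status, to)) {
    throw new Error(`Illegal status change ${share.status} -> ${to}`);
  }
  return { ...share, status: to };
}

let share = { id: "1", status: "Submitted" };
share = withStatus(share, "InProcessing");
share = withStatus(share, "Ready");
console.log(share.status);                      // → Ready
console.log(canTransition("Expired", "Ready")); // → false
```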

Note that here we also inject an instance of ConversionService, but at the method level, since not every action of the controller needs this service.

```csharp
[HttpPost("")]
public async Task<IActionResult> PostShare(IFormFile modelFile,
    [FromServices] ConversionService conversionService)
{
    // FileName is the client-supplied file name (Name would be the form field name)
    _logger.LogDebug($"Received {modelFile.FileName}...");

    Share share = new Share(modelFile.FileName);
    await _storageService.CreateShare(share);
    await _storageService.SaveOriginalModel(share, modelFile);

    // Start the conversion without awaiting it; the callback records the outcome
    conversionService.RunProcessModel(share, async isSuccess =>
    {
        if (isSuccess)
        {
            share.Status = ShareStatus.Ready;
        }
        else
        {
            share.Status = ShareStatus.Error;
        }
        await _storageService.UpdateShare(share);
    });

    return Ok(share);
}
```

The deposit of a newly created share into the database is performed by the StorageService, so we'll look at it next. But first, the StorageService has to be set up to work with the database and file system.

StorageService configuration

For this, we'll use two additional model classes representing the configuration options (in the Models/Settings/ subfolder):

```csharp
namespace ShareYourCAD.Models.Settings;

public class SharesDatabaseSettings
{
    public string ConnectionString { get; set; } = null!;
    public string DatabaseName { get; set; } = null!;
    public string CollectionName { get; set; } = null!;
}

public class FileStorageSettings
{
    public string Location { get; set; } = null!;

    // Maximum age of a share before its files are removed (used by the
    // cleanup job later in this post); defaults to one day
    public int MaxAgeSeconds { get; set; } = 86400;
}
```

In the main file Program.cs, we add lines instructing the builder to read these settings from the configuration file and to instantiate the service. We opt for a singleton service, since we have a single database and file system:

```csharp
builder.Services.Configure<SharesDatabaseSettings>(
    builder.Configuration.GetSection("SharesDatabase"));
builder.Services.Configure<FileStorageSettings>(
    builder.Configuration.GetSection("FileStorage"));
builder.Services.AddSingleton<StorageService>();
```

These values are populated from the corresponding sections of the appsettings.json configuration file, which look like this:

```json
{
  "SharesDatabase": {
    "ConnectionString": "mongodb://localhost:27017",
    "DatabaseName": "ShareYourCAD",
    "CollectionName": "Shares"
  },
  "FileStorage": {
    "Location": "%TEMP%/ShareYourCAD",
    "MaxAgeSeconds": 86400
  }
}
```

The StorageService receives these configuration values through dependency injection and uses them to open a database connection. The path to the file storage folder is kept for later use.

```csharp
using Microsoft.Extensions.Options;
using MongoDB.Driver;
using ShareYourCAD.Models;
using ShareYourCAD.Models.Settings;

namespace ShareYourCAD.Services;

public class StorageService
{
    private readonly IMongoCollection<Share> _sharesCollection;
    private readonly string _fileStorageLocation;

    public StorageService(IOptions<SharesDatabaseSettings> databaseSettings,
        IOptions<FileStorageSettings> fileStorageSettings)
    {
        var mongoClient = new MongoClient(databaseSettings.Value.ConnectionString);
        var mongoDatabase = mongoClient.GetDatabase(databaseSettings.Value.DatabaseName);
        _sharesCollection = mongoDatabase.GetCollection<Share>(
            databaseSettings.Value.CollectionName);

        // .NET configuration does not expand placeholders such as %TEMP%, so expand
        // them here; variables that are not set (e.g. TEMP on most Linux systems)
        // are left as-is, in which case an absolute path should be configured instead
        _fileStorageLocation = Environment.ExpandEnvironmentVariables(
            fileStorageSettings.Value.Location);
    }
}
```

Back to the StorageService's main functionality. Its interface has two sections: one for the database and another for the file system. Functions in the database section are simple shortcuts for the MongoDB operations we need. For instance, the CreateShare() function used in the controller action above stores the share object in the database. There are also functions for retrieving and updating shares.

```csharp
#region database-access

public async Task CreateShare(Share share)
{
    await _sharesCollection.InsertOneAsync(share);
}

public async Task UpdateShare(Share share)
{
    await _sharesCollection.ReplaceOneAsync(x => x.Id == share.Id, share);
}

public async Task<Share?> GetShare(string id)
{
    // IDs are stored as ObjectIds; a malformed ID (e.g. from a hand-edited URL)
    // would otherwise make the query throw instead of simply finding nothing
    if (!MongoDB.Bson.ObjectId.TryParse(id, out _))
    {
        return null;
    }

    Share? share = await _sharesCollection.Find(
        x => id == x.Id).FirstOrDefaultAsync();
    return share;
}

...

#endregion
```

The code for saving the model to the file system is straightforward. We encapsulate the exact paths and directory structure of the model storage within the service. All files pertaining to a given shared model reside in the <shareId> subfolder of the storage root. The original model is saved there as original.<ext>, where <ext> is the original file extension. We discard the rest of the user-supplied file name here as a way to sanitize user input. This is just one of the measures appropriate for an app like ours, and we'll mention other security considerations later.

```csharp
#region filesystem-access

public async Task SaveOriginalModel(Share share, IFormFile modelFile)
{
    if (!Directory.Exists(GetModelFileStoragePrefix(share)))
    {
        Directory.CreateDirectory(GetModelFileStoragePrefix(share));
    }

    string originalPath = GetOriginalModelStoragePath(share);
    using (var originalFile = new FileStream(originalPath, FileMode.Create))
    {
        await modelFile.CopyToAsync(originalFile);
    }
}

public string GetOriginalModelStoragePath(Share share)
{
    return Path.Combine(GetModelFileStoragePrefix(share),
        $"original{Path.GetExtension(share.FileName)}");
}

private string GetModelFileStoragePrefix(Share share)
{
    string filesFolder = Path.Combine(_fileStorageLocation, share.Id!);
    return filesFolder;
}

...

#endregion
```

Once the share is set up on the backend, it's time to process the model to prepare it for visualization.

Background model processing

The upload controller action awaits the registration of the share in the database, but model processing can take a long time, so RunProcessModel() of ConversionService is a synchronous method that is not awaited: it only starts the conversion in the background and returns, allowing the backend to respond with the current status of the share. Let's take a closer look at that service.
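Before we do, the idea of starting work without awaiting it, while keeping a handle so that a later request can wait for it, can be sketched in JavaScript, where a Promise plays the role of a C# Task. This is a hypothetical analogue, not the service's code: the ProcessingRegistry class and its run() and wait() methods are our own names. run() starts the work and returns immediately; wait() resolves once the work and its post-processing callback have both finished.

```javascript
// Hypothetical JS analogue of a registry of background processing tasks.
class ProcessingRegistry {
  constructor() {
    this.tasks = new Map(); // share ID -> promise of the running task
  }

  // Start work in the background and return right away (fire-and-forget).
  // work() resolves to true/false; postProcess(isSuccess) records the outcome.
  run(id, work, postProcess) {
    const task = Promise.resolve()
      .then(() => work())
      .catch(() => false) // a thrown error counts as a failed conversion
      .then(isSuccess => postProcess(isSuccess))
      .catch(err => console.error(`Post-processing of ${id} failed:`, err));
    this.tasks.set(id, task);
  }

  // Resolve once the task for this ID (including postProcess) has finished.
  // Unknown or already-awaited IDs resolve immediately.
  async wait(id) {
    const task = this.tasks.get(id);
    if (task === undefined) {
      return;
    }
    this.tasks.delete(id);
    await task;
  }
}

const registry = new ProcessingRegistry();
let status = "Submitted";
registry.run("42",
  () => new Promise(resolve => setTimeout(() => resolve(true), 50)), // slow "conversion"
  async isSuccess => { status = isSuccess ? "Ready" : "Error"; });
console.log(status);                                 // → Submitted (run() did not block)
registry.wait("42").then(() => console.log(status)); // → Ready
```

Removing the entry on the first wait mirrors a dictionary TryRemove: a second waiter for the same ID simply returns at once.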

To process models, we'll add CAD Exchanger SDK to the project. First, obtain a CAD Exchanger SDK evaluation, download the package and unpack it into the cadexsdk subfolder of the project. Place the license key file cadex_license.cs in the project root. Then add the dependency on the SDK directly to ShareYourCAD.csproj:

```xml
<ItemGroup>
  <!-- Exclude the unpacked SDK folder from the project's default compilation -->
  <Compile Remove="cadexsdk/**" />
</ItemGroup>
<ItemGroup>
  <Reference Include="CadExCoreNet">
    <HintPath>cadexsdk/csharp/netstandard2.0/CadExCoreNet.dll</HintPath>
  </Reference>
</ItemGroup>
```

ConversionService uses CAD Exchanger SDK to read and write models, so it imports the cadex namespace. It also relies on StorageService (injected as usual) to read the original and save the processed model files. The constructor handles the injected dependencies and activates the CAD Exchanger SDK license. Our main interest, however, lies in the RunProcessModel() method, which starts the method responsible for model processing and saves the respective Task (C#'s counterpart of a Future or Promise). The dictionary of processing tasks lets us notify the client when processing finishes, which we'll look at in a bit. Finally, a post-processing step runs, which in our case updates the status of the Share object depending on the result of processing (see the callback passed to RunProcessModel() in ShareController.PostShare()). Since the dictionary has to outlive individual requests, ConversionService must be registered as a singleton in Program.cs, just like StorageService: builder.Services.AddSingleton<ConversionService>();

```csharp
using System.Collections.Concurrent;
using ShareYourCAD.Models;
using cadex;

namespace ShareYourCAD.Services;

public class ConversionService
{
    private readonly ILogger<ConversionService> _logger;
    private StorageService _storageService;
    private ConcurrentDictionary<string, Task> _processingTasks = new ConcurrentDictionary<string, Task>();

    public ConversionService(ILogger<ConversionService> logger,
        StorageService storageService)
    {
        _logger = logger;
        _storageService = storageService;

        string key = LicenseKey.Value();
        if (!LicenseManager.Activate(key))
        {
            throw new ApplicationException("Failed to activate CAD Exchanger license");
        }
    }

    public void RunProcessModel(Share share, Func<bool, Task> postProcess)
    {
        // Run processing on a thread-pool thread and don't await it,
        // so the controller can respond immediately
        Task processingTask = Task.Run(async () =>
        {
            bool isSuccess;
            try
            {
                isSuccess = await ProcessModel(share);
            }
            catch (Exception e)
            {
                _logger.LogError(e, $"Processing of model {share.Id} threw an exception");
                isSuccess = false;
            }
            // Awaiting the async callback means the task completes only after the
            // new share status is saved, so /Share/{id}/Wait never sees a stale one
            await postProcess(isSuccess);
        });
        _processingTasks.TryAdd(share.Id!, processingTask);
    }

    ...
}
```

The actual processing code is shown below. Using CAD Exchanger SDK, we import the original model and then export it to the CDXFB format, which is optimized for web visualization. Because the SDK API primarily works with files on disk rather than streams, ConversionService has to poke its nose into StorageService a bit and request the file paths of the original and processed models to know where to read from and write to. Some dependency inversion would be possible if StorageService provided an API accepting callbacks that call the SDK's code. However, for this simple example we'll keep things as they are.

```csharp
private async Task<bool> ProcessModel(Share share)
{
    share.Status = ShareStatus.InProcessing;
    await _storageService.UpdateShare(share);

    // Read CAD model
    ModelData_ModelReader reader = new ModelData_ModelReader();
    ModelData_Model model = new ModelData_Model();
    string originalPath = _storageService.GetOriginalModelStoragePath(share);
    bool result = reader.Read(new Base_UTF16String(originalPath), model);
    if (!result)
    {
        _logger.LogError($"Import of model {share.Id} failed");
        return false;
    }

    // Write CDXFB model
    ModelData_ModelWriter writer = new ModelData_ModelWriter();
    ModelData_WriterParameters param = new ModelData_WriterParameters();
    param.SetFileFormat(ModelData_WriterParameters.FileFormatType.Cdxfb);
    writer.SetWriterParameters(param);
    string cdxfbDirectory = _storageService.GetProcessedModelStoragePath(share);
    string cdxfbFile = Path.Combine(cdxfbDirectory, "scenegraph.cdxfb");
    result = writer.Write(model, new Base_UTF16String(cdxfbFile));
    if (!result)
    {
        _logger.LogError($"CDXFB export of model {share.Id} failed");
        return false;
    }

    return true;
}
```

When processing finishes, the original request has already been handled, so notifying the client of the result requires another request. After receiving the response to the upload, the client therefore contacts the server again to learn the processing status. For that, we have a separate endpoint, /Share/{id}/Wait, which implements long polling: it returns a response only once processing is over. To support it, ConversionService provides a method that waits for the conversion task of a given share ID.

```csharp
public async Task WaitForProcessingTask(string id)
{
    Task? task;
    // If there is no task (never started or already awaited), return right away
    if (_processingTasks.TryRemove(id, out task))
    {
        await task;
    }
}
```

The ShareController action that handles the notification request uses this method. The "long polling" part comes from awaiting the call to ConversionService. Once processing finishes, we fetch the updated Share object and return it to the client.

```csharp
[HttpGet("{id}/Wait")]
public async Task<IActionResult> WaitForShare(string id,
    [FromServices] ConversionService conversionService)
{
    // Holds the request open until processing of this share finishes
    await conversionService.WaitForProcessingTask(id);

    Share? share = await _storageService.GetShare(id);
    if (share == null)
    {
        return NotFound("Share not found");
    }
    return Ok(share);
}
```

On the client, all this machinery is used as follows. The client checks the response to the upload and, if processing is in progress, indicates this with a spinner in the upload button. Then it makes a request to the /Share/{id}/Wait endpoint. Once that endpoint responds, the UI is updated according to the share's status at that point: for a successfully processed share, we show the link to it and a Copy Link button; for a share that failed processing, we show an error message.

```javascript
let bodyRoot = document.getElementById('body-root');
let dynamicContent = null;

uploadForm.addEventListener('submit', async (event) => {
    event.preventDefault();
    clearDynamicContent(dynamicContent);

    ...

    if (share.status === 'Submitted' || share.status === 'InProcessing') {
        dynamicContent = showProcessingStatus();
        share = await fetch(`/Share/${share.id}/Wait`).then(res => res.json());
        clearDynamicContent(dynamicContent);

        if (share.status === 'Ready') {
            dynamicContent = showProcessedShareLinks(share);
        } else { // if (share.status === 'Error')
            dynamicContent = showErrorShare();
        }
    }
});
```

The dynamicContent variable keeps track of the dynamically changing UI elements. We won't look at the code of the helper functions (clearDynamicContent(), showProcessingStatus() and the others) here, but it can be found in our GitHub repo.
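The handler above assumes that both requests succeed. A slightly more defensive variant of the same upload-then-wait sequence is sketched below. uploadAndWait() is a hypothetical helper, and fetch is passed in as a parameter (window.fetch in the browser) only so that the logic can be exercised without a server. It checks each response's status before parsing its body and URL-encodes the share ID.

```javascript
// Hypothetical helper: upload a model, then long-poll until processing is over.
// fetchFn defaults to the global fetch; it is injectable for testing.
async function uploadAndWait(formData, fetchFn = fetch) {
  const uploadRes = await fetchFn("/Share", { method: "POST", body: formData });
  if (!uploadRes.ok) {
    throw new Error(`Upload failed: HTTP ${uploadRes.status}`);
  }
  let share = await uploadRes.json();

  // Only wait if the server hasn't finished processing yet.
  if (share.status === "Submitted" || share.status === "InProcessing") {
    const waitRes = await fetchFn(`/Share/${encodeURIComponent(share.id)}/Wait`);
    if (!waitRes.ok) {
      throw new Error(`Waiting for processing failed: HTTP ${waitRes.status}`);
    }
    share = await waitRes.json();
  }
  return share; // the share as reported once processing is over
}
```

In the submit handler, a call such as `await uploadAndWait(new FormData(event.target))` wrapped in try/catch would replace the two fetch calls, with the catch branch showing the error message.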

Note also that, by default, the status enum is serialized to JSON as an integer. To serialize it as a string instead, which simplifies the checks on the client, we add the following configuration on the backend:

```csharp
using System.Text.Json.Serialization;

...

builder.Services.AddControllersWithViews()
    .AddJsonOptions(opts =>
    {
        var enumConverter = new JsonStringEnumConverter();
        opts.JsonSerializerOptions.Converters.Add(enumConverter);
    });
```

Serving and displaying shared models

Now we get to the Recipient workflow. Once again, we'll start by creating the HTML layout for the shared model's page, Views/Home/Share.cshtml. The first directive declares the view-model class whose instance the Razor template uses for substitutions. For simplicity, we use the Share model class itself; if it had more properties, it would make sense to introduce a separate, streamlined view-model class. The first substitutions go into the <script> tag that defines global constants for the rest of the client-side code. The contents of the view itself depend on the share's status: if the share has expired, we only display the relevant message.

```cshtml
@model ShareYourCAD.Models.Share

@{
    ViewData["Title"] = Model!.FileName;
}

@section scripts{
    <script>
        const SHARE_ID = '@Model.Id';
        const SHARE_STATUS = '@Model.Status.ToString()';
    </script>
    <script src="https://cdn.jsdelivr.net/npm/@@cadexchanger/web-toolkit@1.2.5/build/cadex.bundle.js"></script>
    <script src="~/js/share.js"></script>
}

@if (Model.Status == ShareStatus.Expired)
{
    <div class="row justify-content-center">
        <p class="col-md-6 fs-5 text-center">This shared model has expired and was removed.</p>
    </div>
}
else
{
    ...
}
```

Otherwise, we need a header bar with the shared file name and "Copy link" and "Download" buttons. If the share has the Ready status, we render a container for the 3D viewer; if not, we display an appropriate message under the header.

```cshtml
<div id="share-header" class="row justify-content-between">
    <div class="col-auto">
        <h1>@Model.FileName</h1>
    </div>
    <div id="share-buttons" class="col-auto">
        <button id="copy-link-button" type="button" class="btn btn-primary">Copy link</button>
        <a id="download-link" role="button" class="btn btn-primary"
           href="/Share/@Model.Id/Original" download="@Model.FileName">
            Download
        </a>
    </div>
</div>

@if (Model.Status == ShareStatus.Ready)
{
    <div class="viewer-container row">
        <div id="model-viewer" class="col-12 h-100"></div>
    </div>
}
else
{
    <div class="row justify-content-center">
        @if (Model.Status == ShareStatus.Error)
        {
            <p class="col-md-6 fs-6 mt-5 text-center">
                The model was not processed successfully.
                You can still download the original file.
            </p>
        }
        else
        {
            <p class="col-md-6 fs-6 mt-5 text-center">
                The model is still processing. Try again in a short while.
            </p>
        }
    </div>
}
```

The backend serves this page via the Share action of HomeController. Unlike the Index action, this one queries StorageService for the share with the given ID and passes it to the view, to be used for substitutions when rendering the template. If the share was not found at all, the template is not used, and a 404 Not Found response is returned instead.

```csharp
public async Task<IActionResult> Share(string? id)
{
    Share? share = id != null ? await _storageService.GetShare(id) : null;
    if (share != null)
    {
        return View(share);
    }

    return NotFound("The requested shared model does not exist.");
}
```

The client-side code resides in the share.js script included in the HTML template. The script finishes setting up the page: it enables the "Copy Link" button and creates the scene and viewport needed for the 3D viewer, attaching the viewport to the DOM. These classes are provided by the cadex.bundle.js script, also included in the template: it's a build of the CAD Exchanger Web Toolkit library bundled with its third-party dependencies.

```javascript
function setupPage() {
    if (SHARE_STATUS === 'Expired') {
        return;
    }

    let copyLinkButton = document.getElementById('copy-link-button');
    copyLinkButton.addEventListener('click', _ => {
        navigator.clipboard.writeText(window.location.href);
    });

    if (SHARE_STATUS !== 'Ready') {
        return;
    }

    // set up the viewer; load and display the model
    const scene = new cadex.ModelPrs_Scene();
    const viewPort = new cadex.ModelPrs_ViewPort({
        showViewCube: true,
        cameraType: cadex.ModelPrs_CameraProjectionType.Perspective,
        autoResize: true,
    }, document.getElementById('model-viewer'));
    viewPort.attachToScene(scene);
    loadAndDisplayModel(SHARE_ID, viewPort, scene);
}

setupPage();
```

The loadAndDisplayModel() function fetches the processed model from the server using the dataLoader() helper, inserts the model contents into the scene and adjusts the camera so that the entire model is visible.

```javascript
async function dataLoader(shareId, subFileName) {
    const res = await fetch(`/Share/${shareId}/Model/${subFileName}`);
    if (res.status === 200) {
        return res.arrayBuffer();
    }
    throw new Error(res.statusText);
}

async function loadAndDisplayModel(shareId, viewPort, scene) {
    try {
        let model = new cadex.ModelData_Model();
        const loadResult = await model.loadFile(shareId, dataLoader, false /*append roots*/);
        let repSelector = new cadex.ModelData_RepresentationMaskSelector(
            cadex.ModelData_RepresentationMask.ModelData_RM_Any);
        await cadex.ModelPrs_DisplayerApplier.apply(loadResult.roots, [], {
            displayer: new cadex.ModelPrs_SceneDisplayer(scene),
            displayMode: cadex.ModelPrs_DisplayMode.Shaded,
            repSelector: repSelector
        });
        // Automatically adjust the camera to show the whole model
        viewPort.fitAll();
    } catch (theErr) {
        ...
    }
}
```

Note that the second parameter of dataLoader() identifies a component of the processed model. A processed CDXFB model is actually a collection of individual files, which enables lazy loading of data from the server. The backend mirrors this: the endpoint for the processed model returns it component by component. There is also a separate endpoint for fetching the original model when the user requests a download. Both controller actions are simple and just forward the request to StorageService.

```csharp
[HttpGet("{id}/Model/{component}")]
public async Task<IActionResult> GetProcessedModelComponent(string id, string component)
{
    Share? share = await _storageService.GetShare(id);
    if (share == null)
    {
        return NotFound("Share not found");
    }
    else if (share.Status != ShareStatus.Ready)
    {
        return NotFound("Processed model not found. Share is not ready; check share status.");
    }

    Stream? stream = _storageService.GetProcessedModelComponent(share, component);
    if (stream == null)
    {
        _logger.LogError($"Missing processed model for ready share {id}");
        return NotFound();
    }

    return File(stream, "application/octet-stream");
}

[HttpGet("{id}/Original")]
public async Task<IActionResult> GetOriginalModel(string id)
{
    Share? share = await _storageService.GetShare(id);
    if (share == null)
    {
        return NotFound("Share not found");
    }

    Stream? stream = _storageService.GetOriginalModel(share);
    if (stream == null)
    {
        _logger.LogError($"Missing original model for share {id}");
        return NotFound();
    }

    return File(stream, "application/octet-stream");
}
```

Finally, for both the original and the processed models, StorageService simply opens the requested file on disk, if it exists:

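```csharp
public Stream? GetProcessedModelComponent(Share share, string component)
{
    // The component name comes from the URL: accept only a bare file name, so a
    // value like "../original.step" cannot reach files outside the model's folder
    if (Path.GetFileName(component) != component)
    {
        return null;
    }

    string componentPath = Path.Combine(GetProcessedModelStoragePath(share), component);
    if (File.Exists(componentPath))
    {
        // Read-only with shared read access, so several clients can download
        // the same file concurrently
        return File.OpenRead(componentPath);
    }
    else
    {
        return null;
    }
}

public Stream? GetOriginalModel(Share share)
{
    string filePath = GetOriginalModelStoragePath(share);
    if (File.Exists(filePath))
    {
        return File.OpenRead(filePath);
    }
    else
    {
        return null;
    }
}
```

Expiration of shares and processing tasks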

The application lifecycle described at the beginning is almost complete. The only thing left is removing the data of expired shares. As it stands, the app only accumulates models and never removes them from the file system, which becomes a problem under heavy use. To address this, we'll use FluentScheduler, the library we added earlier, to schedule a recurring job that removes the model files of shares older than one day.

First, we'll define the class that performs the cleanup: ExpiredSharesRemover. Since there is no state to keep track of, a single static method is enough. Each time the scheduler calls it, the method queries the StorageService for expired shares, updates their status and removes their data from disk. It also receives the storage configuration, which holds the maximum age of a share, and a logger to record what has been removed.

```csharp
using ShareYourCAD.Models;
using ShareYourCAD.Services;

namespace ShareYourCAD.Utils;

public class ExpiredSharesRemover
{
    public static async Task Run(StorageService storageService,
                                 Models.Settings.FileStorageSettings config,
                                 ILogger<StorageService> logger)
    {
        // Shares created before this moment are considered expired.
        // UtcNow avoids local-time quirks (e.g. DST shifts); MongoDB stores dates in UTC.
        DateTime expirationCutoff = DateTime.UtcNow - TimeSpan.FromSeconds(config.MaxAgeSeconds);

        var expiredShares = await storageService.GetExpirationCandidateShares(expirationCutoff);

        int numExpiredShares = 0;
        foreach (Share expiredShare in expiredShares)
        {
            // Mark the share as expired in the database, then delete its files from disk.
            expiredShare.Status = ShareStatus.Expired;
            await storageService.UpdateShare(expiredShare);
            storageService.RemoveModels(expiredShare);
            numExpiredShares += 1;
        }

        logger.LogInformation("Deleted {Count} expired shares", numExpiredShares);
    }
}
```

The implementation of model removal in the StorageService is trivial, so we won't show it here. GetExpirationCandidateShares runs a database query that returns the shares that are not yet marked as expired but were created before the cutoff:

```csharp
public async Task<IEnumerable<Share>> GetExpirationCandidateShares(DateTime expirationCutoff)
{
    using (var cursor = await _sharesCollection.FindAsync(
        s => s.Status != ShareStatus.Expired && s.CreatedAt < expirationCutoff))
    {
        return await cursor.ToListAsync();
    }
}
```

With all this machinery in place, we can set up the scheduler to run ExpiredSharesRemover. We'll do this in the StorageService constructor, which seems an appropriate place, and take the period of the cleanup job from another storage configuration value:

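```csharp
using Fluent = FluentScheduler;

// ...

public StorageService(IOptions<SharesDatabaseSettings> databaseSettings,
                      IOptions<FileStorageSettings> fileStorageSettings,
                      ILogger<StorageService> logger)
{
    // ...

    // Schedule file cleanup
    Fluent.JobManager.Initialize();
    Fluent.JobManager.AddJob(
        // AddJob takes a synchronous Action, so an async lambda would compile
        // to "async void", and an exception thrown in it could crash the process.
        // Waiting for the task lets FluentScheduler catch and report failures.
        () => ExpiredSharesRemover.Run(this, fileStorageSettings.Value, logger)
                  .GetAwaiter().GetResult(),
        s => s.ToRunEvery(fileStorageSettings.Value.PurgePeriodSeconds).Seconds());
}
```

With the maximum age set to one day and the purge period set to one hour, each run expires the shares that have crossed the one-day mark since the previous run.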
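To make the timing concrete, the selection rule and its worst-case delay can be written as two plain functions. This is a language-agnostic illustration in JavaScript rather than the app's C#. The `status`/`createdAt` field names and the `"Expired"` status string are assumptions that mirror the `Share` model.

```javascript
// Mirrors the filter in GetExpirationCandidateShares:
// status is not "Expired" AND createdAt is earlier than (now - maxAge).
// ASSUMPTION: shares are plain objects { id, status, createdAt: Date }.
function selectExpirationCandidates(shares, now, maxAgeSeconds) {
  const cutoff = new Date(now.getTime() - maxAgeSeconds * 1000);
  return shares.filter((s) => s.status !== "Expired" && s.createdAt < cutoff);
}

// A share becomes eligible once it is older than maxAge, but it is only
// removed by the next purge run. The worst-case lifetime is therefore
// maxAge + purgePeriod (ignoring how long the job itself takes).
function latestRemovalTime(createdAt, maxAgeSeconds, purgePeriodSeconds) {
  return new Date(createdAt.getTime() + (maxAgeSeconds + purgePeriodSeconds) * 1000);
}
```

For example, with a one-day maximum age and a one-hour purge period, a share created at 10:00 UTC is guaranteed to be removed by 11:00 UTC the next day. A shorter purge period tightens this bound at the cost of running the database query more often.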

This concludes the description of the major implementation points of our simple app. Some less relevant details were omitted. For example, we haven't covered the client-side code for cases where the model fails to process, or where the share has expired or cannot be found. These details can be found in the full code example available on GitHub.

Here's a GIF showing the main workflow that we have implemented today:

Where to go from here

We also deliberately treated some things as out of scope for this simple app, even though they would be important for anything targeting real-world production use:

- Accepting user data is risky, doubly so when it comes as file uploads from unregistered users. A robust solution would run basic validation on the client side, checking at least that the file type is one of the supported ones. It would then take precautions on the server side: isolate the files in a separate service with minimal exposure to the rest of the application, run virus scans, and sanitize filenames before storing them in the database.

- In the current implementation, concurrent uploads trigger concurrent model processing tasks that run in the same process as the web server. When many clients upload at the same time, requests will start timing out because all worker threads are busy processing models. A better approach is a pool of workers, small enough not to overload the hardware, where each worker runs at most one processing job at a time. Models submitted later then wait their turn. It's also prudent to move these workers into a service of their own and supply them with jobs through a message queue. This improves fault tolerance and ensures that models uploaded earlier are processed promptly.
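The client-side check and the filename sanitization from the first point can be sketched in a few lines of JavaScript. This is a minimal sketch. The extension allow-list is hypothetical and would have to match the formats the backend actually accepts. Client-side checks are also trivial to bypass, so the server must repeat them.

```javascript
// HYPOTHETICAL allow-list; replace with the formats the backend supports.
const SUPPORTED_EXTENSIONS = new Set(["step", "stp", "iges", "igs", "stl", "obj", "gltf", "glb"]);

// Returns the lower-cased extension of a file name, or "" if there is none.
function fileExtension(fileName) {
  const dot = fileName.lastIndexOf(".");
  return dot > 0 ? fileName.slice(dot + 1).toLowerCase() : "";
}

// Client-side pre-check before upload: rejects unsupported file types early.
function isSupportedModelFile(fileName) {
  return SUPPORTED_EXTENSIONS.has(fileExtension(fileName));
}

// Reduces a user-supplied name to a safe display name before it is stored:
// drops any directory part, replaces characters outside a conservative set,
// strips leading dots and caps the length.
function sanitizeFileName(fileName, maxLength = 100) {
  const base = fileName.split(/[\\/]/).pop();        // strip path components
  let safe = base.replace(/[^A-Za-z0-9._ -]/g, "_"); // conservative character set
  safe = safe.replace(/^\.+/, "");                   // no ".." or hidden-file names
  safe = safe.slice(0, maxLength).trim();
  return safe.length > 0 ? safe : "model";           // fallback for empty results
}
```

The character set is deliberately strict, so names with non-Latin characters lose information. That is a trade-off in favor of safety, and it is acceptable because the stored name is only used for display and download.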
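The second point, a small fixed pool of workers with later jobs waiting their turn, comes down to a bounded job queue. The sketch below shows the idea in-process in JavaScript. `createJobQueue` is a hypothetical helper, not part of the app. In the ASP.NET backend, a comparable design could be a hosted `BackgroundService` reading from a bounded `System.Threading.Channels` channel. In the separate-service design, the queue would live in a message broker, with each worker process taking one slot.

```javascript
// HYPOTHETICAL helper: runs submitted jobs with at most `concurrency` of them
// in flight; the rest wait in first-in, first-out order.
function createJobQueue(concurrency) {
  if (!Number.isInteger(concurrency) || concurrency < 1) {
    throw new RangeError("concurrency must be a positive integer");
  }
  const waiting = []; // queued { job, resolve, reject } entries
  let running = 0;

  // Start queued jobs while there is spare capacity.
  function startNext() {
    while (running < concurrency && waiting.length > 0) {
      const { job, resolve, reject } = waiting.shift();
      running += 1;
      let result;
      try {
        result = Promise.resolve(job()); // job may return a value or a promise
      } catch (err) {
        result = Promise.reject(err); // a synchronous throw fails only this job
      }
      result
        .then(resolve, reject)
        .finally(() => {
          running -= 1; // free the slot and let the next job in
          startNext();
        });
    }
  }

  return {
    // Enqueue a job (a function, typically returning a promise);
    // the returned promise settles with the job's outcome.
    submit(job) {
      return new Promise((resolve, reject) => {
        waiting.push({ job, resolve, reject });
        startNext();
      });
    },
    get running() { return running; },
    get pending() { return waiting.length; },
  };
}
```

With `createJobQueue(2)`, a third upload submitted while two models are being processed stays queued until one of them finishes. The server's resources are therefore never shared by more than two processing jobs at once.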

Summary

In this installment of the series, we've explored building a simple web application with 3D features based on CAD Exchanger technologies. We've run through the app structure and key design decisions and outlined the major implementation points. We've also touched upon the topics that need to be addressed before this app can grow from the prototype we have now into something usable in the real world.

The 3D visualization in our app was powered by CAD Exchanger Web Toolkit - a library for interactive CAD data visualization. Find out more and request a trial here.
